The headlines keep coming. DeepSeek’s models have been challenging benchmarks, setting new standards, and making a lot of noise. But something interesting just happened in the AI research scene that is also worth your attention.

Allen AI quietly released their new Tülu 3 family of models, and their 405B parameter version is not just competing with DeepSeek – it is matching or beating it on key benchmarks.

Let us put this in perspective.

The 405B Tülu 3 model is going up against top performers like DeepSeek V3 across a range of tasks. We are seeing comparable or superior performance in areas like math problems, coding challenges, and precise instruction following. And they are also doing it with a completely open approach.

They have released the complete training pipeline, the code, and even their novel reinforcement learning method called Reinforcement Learning with Verifiable Rewards (RLVR) that made this possible.

Developments like these over the past few weeks are really changing how top-tier AI development happens. When a fully open source model can match the best closed models out there, it opens up possibilities that were previously locked behind private corporate walls.

The Technical Battle

What made Tülu 3 stand out? It comes down to a unique four-stage training process that goes beyond traditional approaches.

Let us look at how Allen AI built this model:

Stage 1: Strategic Data Selection

The team knew that model quality starts with data quality. They combined established datasets like WildChat and Open Assistant with custom-generated content. But here is the key insight: they did not just aggregate data – they created targeted datasets for specific skills like mathematical reasoning and coding proficiency.

Stage 2: Building Better Responses

In the second stage, Allen AI focused on teaching their model specific skills. They created different sets of training data – some for math, others for coding, and more for general tasks. By testing these combinations repeatedly, they could see exactly where the model excelled and where it needed work. This iterative process revealed the true potential of what Tülu 3 could achieve in each area.

Stage 3: Learning from Comparisons

This is where Allen AI got creative. They built a system that could instantly compare Tülu 3’s responses against other top models. But they also solved a persistent problem in AI – the tendency for models to write long responses just for the sake of length. Their approach, using length-normalized Direct Preference Optimization (DPO), meant the model learned to value quality over quantity. The result? Responses that are both precise and purposeful.

When AI models learn from preferences (which response is better, A or B?), they tend to develop a frustrating bias: they start thinking longer responses are always better. It is like they are trying to win by saying more rather than saying things well.

Length-normalized DPO fixes this by adjusting how the model learns from preferences. Instead of just looking at which response was preferred, it takes into account the length of each response. Think of it as judging responses by their quality per word, not just their total impact.

Why does this matter? Because it helps Tülu 3 learn to be precise and efficient. Rather than padding responses with extra words to seem more comprehensive, it learns to deliver value in whatever length is actually needed.

This might seem like a small detail, but it is crucial for building AI that communicates naturally. The best human experts know when to be concise and when to elaborate – and that is exactly what length-normalized DPO helps teach the model.

Stage 4: The RLVR Innovation

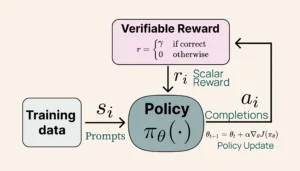

This is the technical breakthrough that deserves attention. RLVR replaces subjective reward models with concrete verification.

Most AI models learn through a complex system of reward models – essentially educated guesses about what makes a good response. But Allen AI took a different path with RLVR.

Think about how we currently train AI models. We usually need other AI models (called reward models) to judge if a response is good or not. It is subjective, complex, and often inconsistent. Some responses might seem good but contain subtle errors that slip through.

RLVR flips this approach on its head. Instead of relying on subjective judgments, it uses concrete, verifiable outcomes. When the model attempts a math problem, there is no gray area – the answer is either right or wrong. When it writes code, that code either runs correctly or it does not.

Here is where it gets interesting:

- The model gets immediate, binary feedback: 10 points for correct answers, 0 for incorrect ones

- There is no room for partial credit or fuzzy evaluation

- The learning becomes focused and precise

- The model learns to prioritize accuracy over plausible-sounding but incorrect responses

RLVR Training (Allen AI)

The results? Tülu 3 showed significant improvements in tasks where correctness matters most. Its performance on mathematical reasoning (GSM8K benchmark) and coding challenges jumped notably. Even its instruction-following became more precise because the model learned to value concrete accuracy over approximate responses.

What makes this particularly exciting is how it changes the game for open-source AI. Previous approaches often struggled to match the precision of closed models on technical tasks. RLVR shows that with the right training approach, open-source models can achieve that same level of reliability.

A Look at the Numbers

The 405B parameter version of Tülu 3 competes directly with top models in the field. Let us examine where it excels and what this means for open source AI.

Math

Tülu 3 excels at complex mathematical reasoning. On benchmarks like GSM8K and MATH, it matches DeepSeek’s performance. The model handles multi-step problems and shows strong mathematical reasoning capabilities.

Code

The coding results prove equally impressive. Thanks to RLVR training, Tülu 3 writes code that solves problems effectively. Its strength lies in understanding coding instructions and producing functional solutions.

Precise Instruction Following

The model’s ability to follow instructions stands out as a core strength. While many models approximate or generalize instructions, Tülu 3 demonstrates remarkable precision in executing exactly what is asked.

Opening the Black Box of AI Development

Allen AI released both a powerful model and their complete development process.

Every aspect of the training process stands documented and accessible. From the four-stage approach to data preparation methods and RLVR implementation – the entire process lies open for study and replication. This transparency sets a new standard in high-performance AI development.

Developers receive comprehensive resources:

- Complete training pipelines

- Data processing tools

- Evaluation frameworks

- Implementation specifications

This enables teams to:

- Modify training processes

- Adapt methods for specific needs

- Build on proven approaches

- Create specialized implementations

This open approach accelerates innovation across the field. Researchers can build on verified methods, while developers can focus on improvements rather than starting from zero.

The Rise of Open Source Excellence

The success of Tülu 3 is a big moment for open AI development. When open source models match or exceed private alternatives, it fundamentally changes the industry. Research teams worldwide gain access to proven methods, accelerating their work and spawning new innovations. Private AI labs will need to adapt – either by increasing transparency or pushing technical boundaries even further.

Looking ahead, Tülu 3’s breakthroughs in verifiable rewards and multi-stage training hint at what is coming. Teams can build on these foundations, potentially pushing performance even higher. The code exists, the methods are documented, and a new wave of AI development has begun. For developers and researchers, the opportunity to experiment with and improve upon these methods marks the start of an exciting chapter in AI development.

Frequently Asked Questions (FAQ) about Tülu 3

What is Tülu 3 and what are its key features?

Tülu 3 is a family of open-source LLMs developed by Allen AI, built upon the Llama 3.1 architecture. It comes in various sizes (8B, 70B, and 405B parameters). Tülu 3 is designed for improved performance across diverse tasks including knowledge, reasoning, math, coding, instruction following, and safety.

What is the training process for Tülu 3 and what data is used?

The training of Tülu 3 involves several key stages. First, the team curates a diverse set of prompts from both public datasets and synthetic data targeted at specific skills, ensuring the data is decontaminated against benchmarks. Second, supervised finetuning (SFT) is performed on a mix of instruction-following, math, and coding data. Next, direct preference optimization (DPO) is used with preference data generated through human and LLM feedback. Finally, Reinforcement Learning with Verifiable Rewards (RLVR) is used for tasks with measurable correctness. Tülu 3 uses curated datasets for each stage, including persona-driven instructions, math, and code data.

How does Tülu 3 approach safety and what metrics are used to evaluate it?

Safety is a core component of Tülu 3’s development, addressed throughout the training process. A safety-specific dataset is used during SFT, which is found to be largely orthogonal to other task-oriented data.

What is RLVR?

RLVR is a technique where the model is trained to optimize against a verifiable reward, like the correctness of an answer. This differs from traditional RLHF which uses a reward model.

The post Allen AI’s Tülu 3 Just Became DeepSeek’s Unexpected Rival appeared first on Unite.AI.